RNN #7 - The First Neural-Net Computer

SNARC and the Formative Years of AI

Hello,

In today's issue of Recurrent Neural Notes, we embark on a historical exploration of Artificial Intelligence, tracing its roots from early theoretical models to the pioneering attempts in the field.

This newsletter aims to share personal insights & discoveries from the AI domain, providing a unique view into this vast field in the form of weekly short notes. If you like what you see, consider subscribing!

Have you ever pondered the origins of Artificial Intelligence?

This is a difficult question. It’s hard to pinpoint exactly when AI began, because it intersects many fields and techniques. A better question might be: how do we define Artificial Intelligence, and what are the historical milestones of that definition?

Do we start with the modern era of Deep Learning that emerged around 2011, or do we delve into the earlier days of neural networks, dating back to the popularization of the backpropagation algorithm in 1986? Or should we go back even further and consider Rosenblatt’s Perceptron in 1958, the concept of Hebbian Learning from 1949, or the foundational model of a neuron by McCulloch and Pitts in 1943? And what about earlier work, such as Rashevsky’s neural network models from the 1930s?

Yet, AI is not just about neural networks. We must also consider other approaches like the expert systems that dominated the 70s and 80s, or various techniques leading to intelligent behavior, including basic search methods. Some argue that Machine Learning is essentially 'applied statistics.' Should early statistical or probabilistic models be regarded as the first forays into AI?

Perhaps a more focused question is: When did we start seriously researching and developing AI systems? Key moments like the 1955 “Session on Learning Machines,” the 1956 “Dartmouth Summer Project” (for which John McCarthy coined the term 'Artificial Intelligence' in the 1955 proposal), and the 1958 “Mechanization of Thought Processes” symposium mark significant points in AI's history.

Or we could take a philosophical route, recognizing ancient attempts at imagining thinking machines, such as the myths of Pygmalion, Daedalus and Hephaestus, and the artificial beings associated with them, such as Galatea, Talos and Pandora.

While the quest to pinpoint AI's exact origin remains intriguing, let's shift our focus to a more tangible question that might be easier to answer: When was the first real machine built for AI purposes?

Stochastic Neural Analog Reinforcement Calculator

Marvin Minsky is often associated with his critique of neural networks, notably in the influential 1969 book “Perceptrons” (co-authored with Seymour Papert), which is widely blamed for stalling neural network research for years. Yet during his graduate studies at Princeton, he held a surprisingly different stance. Fascinated by models of the brain, especially the groundbreaking work on artificial neurons by McCulloch and Pitts, Minsky explored the frontiers of universal computation within neural networks, an area his Ph.D. committee skeptically viewed as marginal to mathematics.

Together with Dean Edmonds, Minsky managed to secure funding in 1951 to build a neural-net machine called the Stochastic Neural Analog Reinforcement Calculator (SNARC). Its very name hints at how it worked:

Stochastic Approach: SNARC's learning process was infused with randomness. Minsky incorporated probabilistic elements to mimic the uncertain and varied nature of biological neural processing.

Analog Computing: Unlike modern digital computers, which process binary data, SNARC was an analog computer that used potentiometers, motors and knobs for learning.

Reinforcement Learning: At its core, SNARC's learning mirrored what we now know as “reinforcement learning,” drawing upon B.F. Skinner's concept of reinforcing stimuli.

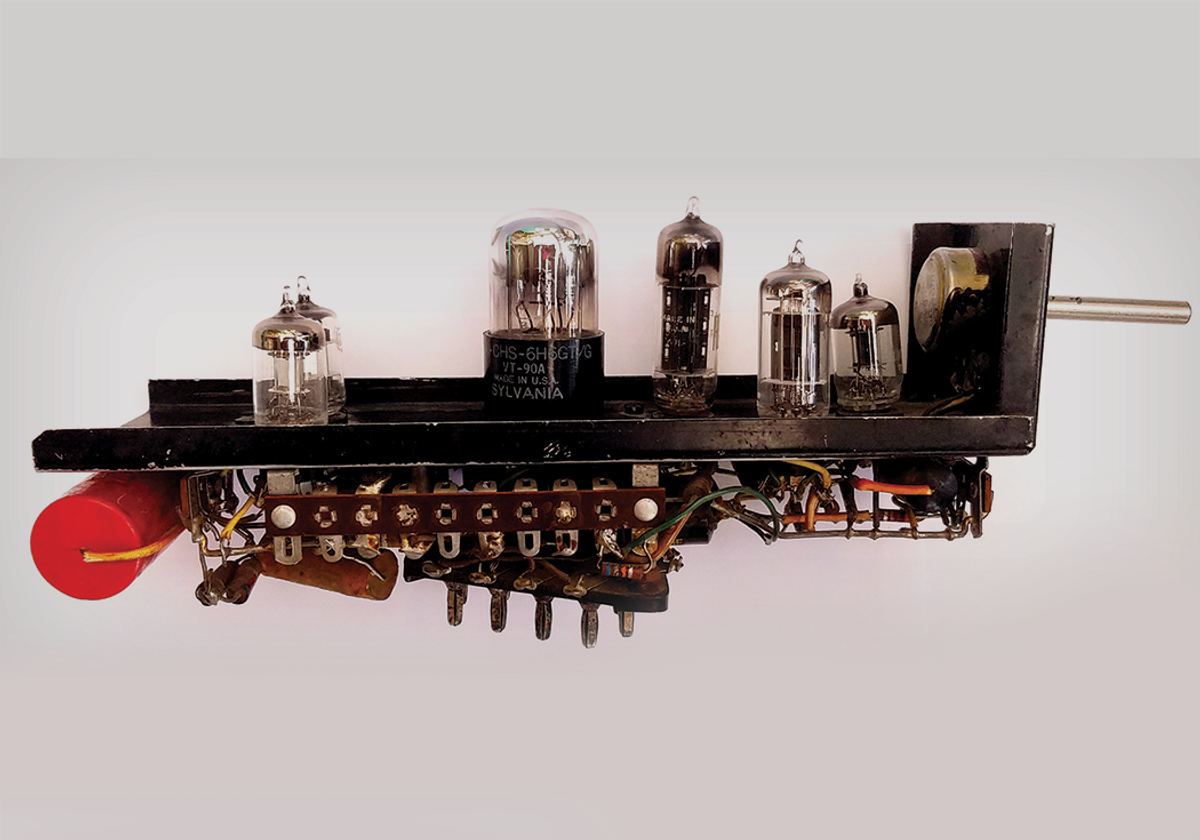

Constructed from 3000 vacuum tubes, numerous motors, control knobs, electric clutches, and a surplus automatic pilot mechanism from a B-24 bomber, SNARC simulated 40 artificial neuron/synapse units based on the concept of Hebbian learning, introduced just two years earlier. Each synapse had a memory function associated with positive rewards, and learning occurred as the electric clutches adjusted the control knobs, reinforcing successful pathways.

SNARC's primary experimental objective was to emulate a rat navigating a virtual maze and learning a path to the exit. At first, the rat would move randomly, but over time successful attempts were reinforced, making it more likely for the machine to follow a similar path in the future. When the rat reached the goal and earned a positive reward, an electric clutch engaged a moving chain that turned the potentiometer.
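To make the idea of "reinforcing successful pathways" a bit more concrete, here is a minimal Python sketch of the same principle. It is not a model of SNARC's actual circuitry: the three-junction maze, the `MAZE` table and the functions `run_trial`, `train` and `choice_probability` are all hypothetical, and each (junction, move) weight only loosely stands in for a synapse's potentiometer setting. The rat starts out choosing at random; whenever it reaches the exit, every choice it used gets a little more weight, so similar paths become more likely next time.

```python
import random

# Hypothetical toy maze (NOT SNARC's real maze): each junction maps a move
# to the next junction. Reaching "EXIT" ends a run successfully.
MAZE = {
    "A": {"left": "B", "right": "A", "up": "A"},
    "B": {"left": "B", "right": "C", "up": "A"},
    "C": {"left": "A", "right": "C", "up": "EXIT"},
}


def run_trial(weights, rng, max_steps=20):
    """Let the rat wander from junction A, picking each move at random
    with probability proportional to its current weight."""
    position, path = "A", []
    for _ in range(max_steps):
        moves = list(MAZE[position])
        move = rng.choices(moves, weights=[weights[(position, m)] for m in moves])[0]
        path.append((position, move))
        position = MAZE[position][move]
        if position == "EXIT":
            return path, True
    return path, False


def train(trials=200, reward=1.0, seed=0):
    """Run many trials and reinforce the moves used on successful runs.
    Returns the learned weights and the number of successful runs."""
    rng = random.Random(seed)
    # Every (junction, move) "synapse" starts equal: a pure random walk.
    weights = {(j, m): 1.0 for j, moves in MAZE.items() for m in moves}
    successes = 0
    for _ in range(trials):
        path, success = run_trial(weights, rng)
        if success:
            successes += 1
            # Loosely analogous to a clutch turning the knobs of the
            # synapses that were just active: each move used gets +reward.
            for step in set(path):
                weights[step] += reward
    return weights, successes


def choice_probability(weights, junction, move):
    """Probability that the rat picks `move` the next time it is at `junction`."""
    total = sum(weights[(junction, m)] for m in MAZE[junction])
    return weights[(junction, move)] / total


if __name__ == "__main__":
    weights, successes = train()
    print(f"successful runs: {successes}")
    for junction, moves in MAZE.items():
        for move in moves:
            print(junction, move, round(choice_probability(weights, junction, move), 2))
```

Moves into dead ends that happen to occur on a successful run are reinforced too, a crude form of credit assignment. They still tend to fall behind, because every successful run must pass through the correct moves (A-left, B-right, C-up), while the detours appear only on some runs.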

The machine surprised even its creators: due to a fault in its design, more than one rat could be “spawned” in the maze. These rats, representing different paths within the machine, could influence each other by sharing good paths.

Unfortunately, there are no photographs of the complete SNARC assembly, only of a single neuron unit:

SNARC is considered one of the first pioneering projects of AI, a blend of innovation and vision that marks a pivotal chapter in AI's early days. In future issues, we'll further unravel AI's history, exploring its diverse milestones and the pioneering spirits behind them. If you want to know more about the development of this exciting field, consider subscribing!

Thank you for reading,

Matej Hladky | Recurrent Neural Notes